Techniques for effective prompt engineering

In the past two years, a wide array of prompt engineering techniques has been developed. This section focuses on the essential ones, offering key strategies that you might find indispensable for daily interactions with ChatGPT and other LLM-based applications.

N-shot prompting

N-shot prompting, also called in-context learning, is a technique for steering a large language model at inference time rather than through additional training. The prompt itself contains example inputs along with their corresponding responses, and the model uses these examples to provide more accurate responses. The term covers zero-shot, one-shot, and few-shot prompting.

The “N” in “N-shot” refers to the number of example prompts provided to the model. In a zero-shot scenario, no examples are given and the model relies on the instruction alone. In a one-shot scenario, only one example prompt and its response are given to the model. In a few-shot scenario, multiple example prompts and responses are provided.

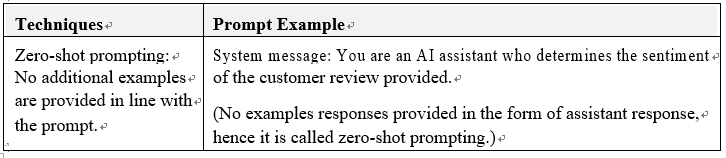

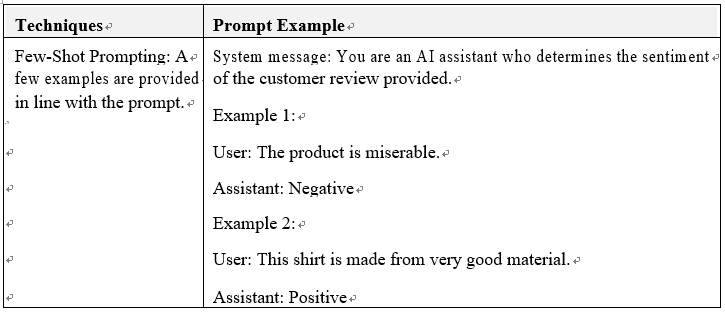

While ChatGPT works great with zero-shot prompting, it may sometimes be useful to provide examples for a more accurate response. Let’s see some examples of zero-shot and few-shot prompting:

Figure 5.8 – N-shot prompting examples
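The difference between zero-shot and few-shot prompting can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the function name `build_n_shot_prompt`, the `Text:`/`Sentiment:` layout, and the sample reviews are all assumptions made for this example, and the resulting string would still need to be sent to an LLM of your choice.

```python
def build_n_shot_prompt(instruction, examples, query):
    """Return a prompt made of an instruction, N solved examples, and the new query.

    `examples` is a list of (text, label) pairs; an empty list gives a zero-shot prompt.
    The "Text:/Sentiment:" layout is an illustrative choice, not a required format.
    """
    parts = [instruction.strip()]
    for text, label in examples:
        # Each solved example is one "shot" the model can imitate.
        parts.append(f"Text: {text}\nSentiment: {label}")
    # The final block is left open so the model completes the label.
    parts.append(f"Text: {query}\nSentiment:")
    return "\n\n".join(parts)


# Hypothetical task and data, invented for this sketch.
INSTRUCTION = "Classify each text as positive or negative."
EXAMPLES = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
QUERY = "Setup was quick and painless."

zero_shot_prompt = build_n_shot_prompt(INSTRUCTION, [], QUERY)
few_shot_prompt = build_n_shot_prompt(INSTRUCTION, EXAMPLES, QUERY)

print(few_shot_prompt)
```

Passing an empty example list yields the zero-shot variant, so the same helper can be used to compare how a model responds with and without demonstrations.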

Chain-of-thought (CoT) prompting

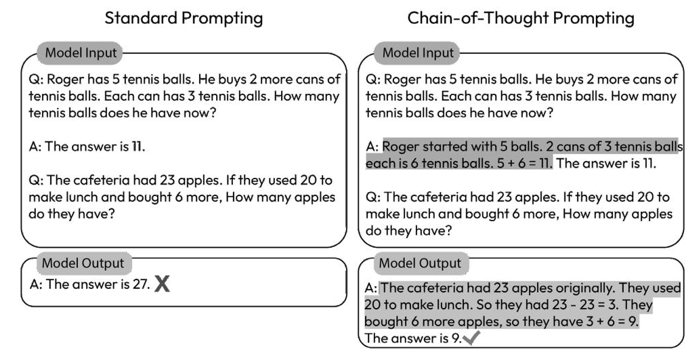

Chain-of -thought prompting refers to a sequence of intermediate reasoning steps, significantly boosting the capability of large language models to tackle complex reasoning tasks. By presenting a few chain-of-thought demonstrations as examples in the prompts, the models proficiently handle intricate reasoning tasks:

Figure 5.9 – Chain-of-thought prompting examples

Figure sourced from https://arxiv.org/pdf/2201.11903.pdf.
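A chain-of-thought prompt differs from a plain few-shot prompt only in that each demonstration spells out its reasoning before stating the answer. The sketch below assumes a simple `Q:`/`A:` layout and a closing phrase of the form "The answer is N." so the final number can be pulled out with a regular expression. The demonstration, the helper names, and the sample completion are invented for illustration and are not taken from the cited paper.

```python
import re

# One hand-written demonstration whose answer shows the intermediate steps.
COT_DEMO = (
    "Q: A shelf holds 4 boxes with 6 pens each. 5 pens are removed. "
    "How many pens remain?\n"
    "A: 4 boxes of 6 pens is 24 pens. Removing 5 leaves 24 - 5 = 19. "
    "The answer is 19."
)


def build_cot_prompt(question, demos=(COT_DEMO,)):
    """Prepend reasoning demonstrations, then leave the new answer open."""
    return "\n\n".join([*demos, f"Q: {question}\nA:"])


def extract_answer(completion):
    """Return the number after 'The answer is', or None if the phrase is absent."""
    match = re.search(r"The answer is (-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


prompt = build_cot_prompt(
    "A bus has 30 seats and 18 are taken. 7 more riders board. "
    "How many seats are free?"
)

# Stand-in for a model reply; a real call to an LLM would go here.
sample_completion = (
    "30 seats minus 18 taken leaves 12 free. "
    "7 more riders leave 12 - 7 = 5. The answer is 5."
)

print(prompt)
print(extract_answer(sample_completion))
```

Asking for a fixed closing phrase is a design choice that keeps parsing simple; if the model does not follow the pattern, `extract_answer` returns `None` rather than guessing.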

Program-aided language (PAL) models

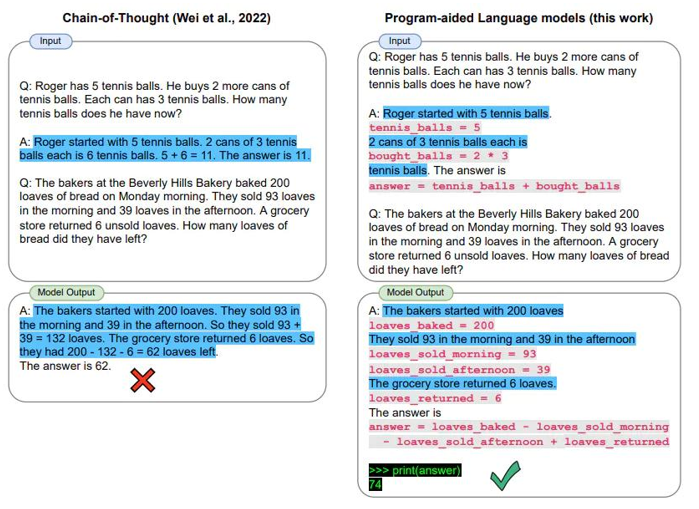

Program- aided language (PAL) models, also called program-of -thought prompting ( PoT), is a technique that incorporates additional task-specific instructions, pseudo-code, rules, or programs alongside the free-form text to guide the behavior of a language model:

Figure 5.10 – Program-aided language prompting examples

Figure sourced from https://arxiv.org/abs/2211.10435.
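In the PAL approach described in the cited paper, the model writes its reasoning as a short program and an interpreter, rather than the model, computes the final result. The following sketch illustrates that division of labor under several assumptions: the prompt layout, the convention that the program stores its result in a variable named `answer`, the helper `run_generated_program`, and the stubbed model output are all hypothetical. Emptying the builtins only blocks casual misuse; real model output should run in a proper sandbox.

```python
# Demonstration showing the model how to answer with code instead of prose.
PAL_DEMO = (
    "Q: A crate holds 8 jars. How many jars are in 5 crates after 3 break?\n"
    "# solution in Python\n"
    "jars_per_crate = 8\n"
    "crates = 5\n"
    "broken = 3\n"
    "answer = jars_per_crate * crates - broken"
)


def build_pal_prompt(question, demos=(PAL_DEMO,)):
    """Prepend program-style demonstrations and ask for a program in reply."""
    return "\n\n".join([*demos, f"Q: {question}\n# solution in Python"])


def run_generated_program(program):
    """Execute the generated program and return its `answer` variable.

    WARNING: exec on untrusted text is unsafe. The empty builtins here are
    not a security boundary; use an isolated sandbox for real model output.
    """
    namespace = {}
    exec(program, {"__builtins__": {}}, namespace)
    return namespace["answer"]


prompt = build_pal_prompt(
    "A bakery bakes 7 trays of 12 rolls and sells 30. How many rolls are left?"
)

# Stand-in for the program an LLM might return for the question above.
stub_completion = (
    "trays = 7\n"
    "rolls_per_tray = 12\n"
    "sold = 30\n"
    "answer = trays * rolls_per_tray - sold"
)

print(run_generated_program(stub_completion))  # 54
```

Because the arithmetic is carried out by the Python interpreter, the model only has to translate the problem into code correctly; it does not have to perform the calculation itself.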

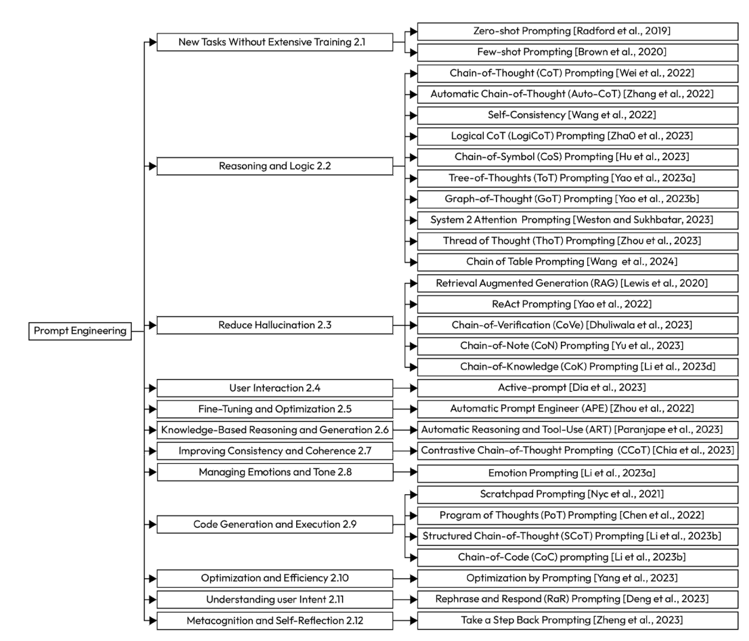

In this section, although we have not explored all prompt engineering techniques (only the most important ones), we want to convey to our readers that there are numerous variants of these techniques, as illustrated in the following figure from the research paper A Systematic Survey of prompt engineering in Large Language Models: Techniques and Applications (https://arxiv.org/pdf/2402.07927. pdf). This paper provides an extensive inventory of prompt engineering strategies across various application areas, showcasing the evolution and breadth of this field over the last four years:

Figure 5.11 – Taxonomy of prompt engineering techniques across multiple application domains