The essentials of prompt engineering

Before discussing prompt engineering, it is important to understand the building blocks it works with. In this section, we’ll look at ChatGPT prompts, completions, and tokens. Understanding tokens in particular is pivotal to understanding the model’s constraints and managing costs.

ChatGPT prompts and completions

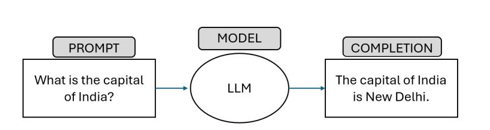

A prompt is the input provided to an LLM, whereas a completion is the LLM’s output. The structure and content of a prompt can vary based on the type of LLM (e.g., a text or image generation model), the specific use case, and the desired output of the language model.

A completion is the response ChatGPT generates for a prompt; basically, it is the answer to your question. The following example shows the difference between a prompt and a completion when we prompt ChatGPT with, “What is the capital of India?”

Figure 5.2 – An image showing a sample LLM prompt and completion

Based on the use case, we can leverage one of the two ChatGPT API calls, named Completions or ChatCompletions, to interact with the model. However, OpenAI recommends using the ChatCompletions API in the majority of scenarios.

Completions API

The Completions API is designed to generate creative, free-form text. You provide a prompt, and the API generates text that continues from it. This is often used for tasks where you want the model to answer a question or generate creative text, such as for writing an article or a poem.

ChatCompletions API

The ChatCompletions API is designed for multi-turn conversations. You send a series of messages instead of a single prompt, and the model generates a message as a response. Each message sent to the model includes a role (system, user, or assistant) and the content of the message. The system role sets the behavior of the assistant, the user role carries the instructions or questions for the assistant, and the model’s responses appear under the assistant role.

The following is a sample ChatCompletions API call:

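# Uses the openai Python library interface from before v1.0
import openai

openai.api_key = "your-api-key"

response = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        # The system message sets the assistant's behavior
        {"role": "system", "content": "You are a helpful sports assistant."},
        {"role": "user", "content": "Who won the cricket world cup in 2011?"},
        # Earlier assistant reply, resent so the model sees the conversation history
        {"role": "assistant", "content": "India won the cricket world cup in 2011"},
        # The follow-up question comes from the user, not the assistant
        {"role": "user", "content": "Where was it played?"},
    ],
)

# Print the text of the first generated choice
print(response["choices"][0]["message"]["content"])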
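The roles described above also shape how a conversation is carried from one turn to the next: in the sample call, the earlier user and assistant messages are sent again together with the new question. The following is a minimal sketch of that bookkeeping in plain Python, with no network call. The helper names `new_conversation` and `add_turn` are hypothetical and are not part of the openai library.

```python
VALID_ROLES = {"system", "user", "assistant"}


def new_conversation(system_prompt):
    """Start a message list with a system message that sets the assistant's behavior."""
    return [{"role": "system", "content": system_prompt}]


def add_turn(messages, role, content):
    """Return a new message list with one more message appended."""
    if role not in VALID_ROLES:
        raise ValueError(f"unknown role: {role!r}")
    return messages + [{"role": role, "content": content}]


# Rebuild the conversation from the sample call, one turn at a time
messages = new_conversation("You are a helpful sports assistant.")
messages = add_turn(messages, "user", "Who won the cricket world cup in 2011?")
messages = add_turn(messages, "assistant", "India won the cricket world cup in 2011")
messages = add_turn(messages, "user", "Where was it played?")

for m in messages:
    print(f"{m['role']}: {m['content']}")
```

The resulting list has the same shape as the `messages` argument in the sample call, so it could be passed to the API as-is.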

The main difference between the Completions API and ChatCompletions API is that the Completions API is designed for single-turn tasks, while the ChatCompletions API is designed to handle multiple turns in a conversation, making it more suitable for building conversational agents. However, the ChatCompletions API format can be modified to behave as a Completions API by using a single user message.
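As a rough illustration of that last point, a free-form prompt can be wrapped as a one-message conversation. This is a sketch only; the helper name `prompt_to_messages` is an assumption made for this example and does not come from the openai library.

```python
def prompt_to_messages(prompt, system_prompt=None):
    """Wrap a single free-form prompt in the chat message format.

    The optional system message is included only when one is given.
    """
    messages = []
    if system_prompt is not None:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": prompt})
    return messages


single_turn = prompt_to_messages("Write a two-line poem about cricket.")
print(single_turn)
```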

Important note

The Completions API, launched in June 2020, initially offered a freeform text interface for OpenAI’s language models. However, experience has shown that structured prompts often yield better outcomes. The chat-based approach, especially through the ChatCompletions API, addresses a wide array of needs, offering enhanced flexibility and specificity and reducing prompt injection risks. Its design supports multi-turn conversations and a variety of tasks, enabling developers to create advanced conversational experiences. Hence, OpenAI announced that it would deprecate some of the older models that use the Completions API and, going forward, invest in the ChatCompletions API to make better use of its compute capacity. While the Completions API will remain accessible, it will be labeled as “legacy” in the OpenAI developer documentation.

Tokens

Understanding the concept of tokens is essential, as it helps us better comprehend the model’s limits and manage costs when using ChatGPT.

A ChatGPT token is a unit of text that ChatGPT’s language model uses to understand and generate language. In ChatGPT, a token is a sequence of characters; the model reads the prompt as a sequence of tokens and generates new tokens to form a coherent response. The models use tokens to represent words, phrases, and other language elements. Tokens are not necessarily cut where a word starts or ends; they can also include trailing spaces, subwords, and punctuation.

As stated on the OpenAI website, tokens can be thought of as pieces of words. Before the API processes the prompts, the input is broken down into tokens.

To get a feel for token lengths, the following rules of thumb are commonly used:

- 1 token ~= 4 chars in English

- 1 token ~= ¾ of a word

- 100 tokens ~= 75 words

- 1–2 sentences ~= 30 tokens

- 1 paragraph ~= 100 tokens

- 1,500 words ~= 2,048 tokens

- 1 US page (8 ½” x 11”) ~= 450 tokens (assuming ~1800 characters per page)

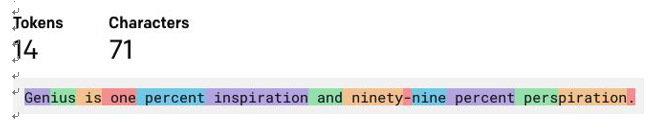

For example, this famous quote from Thomas Edison (“Genius is one percent inspiration and ninety-nine percent perspiration.”) has 14 tokens:

Figure 5.3 – Tokenization of sentence
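The rules of thumb listed earlier can be checked against this quote. The sketch below applies the character-based and word-based rules and adds a simple cost calculation. The function names are hypothetical, and the price is a placeholder chosen for illustration, not a real OpenAI price.

```python
def estimate_tokens_from_chars(text):
    """Rule of thumb: 1 token ~= 4 characters of English text."""
    return round(len(text) / 4)


def estimate_tokens_from_words(text):
    """Rule of thumb: 1 token ~= 3/4 of a word, so tokens ~= words / 0.75."""
    return round(len(text.split()) / 0.75)


def estimate_cost(tokens, price_per_1k_tokens):
    """Cost of `tokens` tokens at a given price per 1,000 tokens."""
    return tokens / 1000 * price_per_1k_tokens


# Placeholder value for illustration only; check the current pricing page
HYPOTHETICAL_PRICE_PER_1K = 0.01

quote = "Genius is one percent inspiration and ninety-nine percent perspiration."

by_chars = estimate_tokens_from_chars(quote)
by_words = estimate_tokens_from_words(quote)
print("estimate from characters:", by_chars)
print("estimate from words:", by_words)
print("estimated cost:", estimate_cost(by_chars, HYPOTHETICAL_PRICE_PER_1K))
```

For this quote, the two estimates come out at 18 and 12 tokens, on either side of the 14 tokens reported by the tokenizer, which shows that the rules of thumb are approximations rather than exact counts.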

We used the OpenAI Tokenizer tool to calculate the tokens; the tool can be found at https://platform.openai.com/tokenizer. An alternative way to tokenize text (programmatically) is to use the tiktoken library on GitHub; this can be found at https://github.com/openai/tiktoken.
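A programmatic token count might look like the following sketch. It uses tiktoken’s `encoding_for_model` and `encode` calls; the wrapper `count_tokens` and its fallback to the four-characters-per-token rule of thumb are assumptions added so that the example still runs when tiktoken is not installed or its encoding data cannot be loaded.

```python
def count_tokens(text, model="gpt-3.5-turbo"):
    """Count tokens with tiktoken when possible, else return a rough estimate."""
    try:
        import tiktoken

        # Look up the encoding that the given model uses, then encode the text
        encoding = tiktoken.encoding_for_model(model)
        return len(encoding.encode(text))
    except Exception:
        # Fallback (an assumption for this sketch): tiktoken is unavailable,
        # so approximate with the rule of thumb of about 4 characters per token
        return max(1, round(len(text) / 4))


quote = "Genius is one percent inspiration and ninety-nine percent perspiration."
print(count_tokens(quote))
```

The exact count depends on the encoding used by the chosen model, so it may differ from the figure shown by the web-based tool.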