Chunking strategies

In our last discussion, we covered vector DBs and RAG. Before a RAG pipeline can retrieve anything, the embedded data has to be stored efficiently. We touched on indexing methods that speed up data fetching, but one crucial step comes even before that: chunking.

What is chunking?

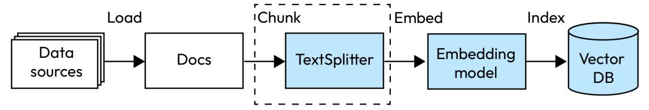

In the context of building LLM applications with embedding models, chunking means dividing a long piece of text into smaller, manageable pieces, or “chunks,” that fit within the model’s token limit before they are sent to the embedding model. As shown in Fig 4.12, chunking happens before the embedding process. Documents come in different structures, such as free-flowing text, code, or HTML, so different chunking strategies can be applied to attain optimal results. Tools such as LangChain provide functionality to chunk your data efficiently based on the nature of the text.

Fig 4.12 depicts a data processing workflow and highlights the chunking step. Raw “Data sources” are first converted into “Documents.” Central to the workflow is the “Chunk” stage, where a “TextSplitter” breaks the data into smaller segments. These chunks are transformed into numerical representations by an “Embedding model” and then indexed into a “Vector DB” for efficient search and retrieval. At query time, the text associated with the retrieved chunks is sent as context to the LLM, which generates the final response.

Fig 4.12 – Chunking Process
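The workflow in Fig 4.12 can be sketched end to end in plain Python. This is a toy illustration only, not how a production system works: `toy_embed` is a hypothetical stand-in that counts words (a real application would call an embedding model), a Python list stands in for the vector DB, and every function name here is an assumption introduced for illustration.

```python
import math
from collections import Counter

def split_into_chunks(text, words_per_chunk=12):
    """Chunk stage: split a document into fixed-size groups of words."""
    words = text.split()
    return [" ".join(words[i:i + words_per_chunk])
            for i in range(0, len(words), words_per_chunk)]

def toy_embed(text):
    """Hypothetical stand-in for an embedding model: a sparse word-count vector."""
    tokens = (word.strip(".,!?;:").lower() for word in text.split())
    return Counter(token for token in tokens if token)

def cosine_similarity(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def build_index(documents):
    """Index stage: store (vector, chunk text) pairs in a list acting as the vector DB."""
    return [(toy_embed(chunk), chunk)
            for document in documents
            for chunk in split_into_chunks(document)]

def retrieve(index, query, top_k=1):
    """Retrieval stage: return the text of the chunks most similar to the query."""
    query_vector = toy_embed(query)
    ranked = sorted(index,
                    key=lambda item: cosine_similarity(query_vector, item[0]),
                    reverse=True)
    return [chunk for _, chunk in ranked[:top_k]]

documents = [
    "Chunking splits long documents into smaller pieces before they are embedded. "
    "Each chunk must fit within the token limit of the embedding model.",
    "A vector database indexes embeddings so that similar chunks can be found "
    "quickly at query time.",
]

index = build_index(documents)
question = "What is the token limit of the embedding model?"
context = retrieve(index, question)

# The retrieved chunk text would be sent to the LLM as context for the question.
prompt = f"Context: {context[0]}\n\nQuestion: {question}"
print(prompt)
```

Note that the fixed 12-word split cuts the first document mid-sentence, which is exactly the kind of boundary problem that chunking strategies try to address.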

But why is it needed?

Chunking is vital for two main reasons:

- It improves retrieval quality. Dividing document text strategically helps embedding models capture the meaning of each piece and boosts the relevance of the content retrieved from a vector DB. Essentially, it refines the accuracy and context of the results sourced from the database.

- It tackles the token constraints of embedding models. For instance, Azure OpenAI embedding models such as text-embedding-ada-002 accept up to 8,191 tokens, which is roughly 6,000 words, assuming each token averages about four characters. For optimal embeddings, it is crucial that our text stays within this limit.
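The token arithmetic above can be turned into a quick pre-check. The sketch below relies only on the four-characters-per-token rule of thumb, so it is an estimate, not a tokenizer; exact counts require the model's actual tokenizer (for OpenAI models, the tiktoken library). The function names are assumptions introduced for illustration.

```python
import math

MAX_EMBEDDING_TOKENS = 8191  # limit quoted above for text-embedding-ada-002
CHARS_PER_TOKEN = 4          # rule-of-thumb average, not an exact figure

def estimate_tokens(text):
    """Rough token estimate from the character count; not a real tokenizer."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def fits_embedding_limit(text, limit=MAX_EMBEDDING_TOKENS):
    """Return True if the estimated token count stays within the limit."""
    return estimate_tokens(text) <= limit

short_text = "Chunking keeps each piece of text within the model's limit."
long_text = "word " * 10_000  # 50,000 characters

print(fits_embedding_limit(short_text))  # True
print(estimate_tokens(long_text))        # 12500
print(fits_embedding_limit(long_text))   # False
```

A text that fails this check is a candidate for chunking before it is sent to the embedding model.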

Popular chunking strategies

- Fixed-size chunking: This is a very common approach that defines a fixed chunk size (for example, 200 words), which is usually enough to capture the semantic meaning of a paragraph, and adds an overlap of about 10–15% between consecutive chunks before they are passed to the embedding model. The slight overlap helps preserve context across chunk boundaries. It’s advisable to begin with a roughly 10% overlap. Below is a code snippet that demonstrates fixed-size chunking with LangChain’s TokenTextSplitter, which measures chunk size in tokens rather than words:

# Newer LangChain releases expose the same class via:
#   from langchain_text_splitters import TokenTextSplitter
from langchain.text_splitter import TokenTextSplitter

text = "Ladies and Gentlemen, esteemed colleagues, and honored \
guests. Esteemed leaders and distinguished members of the \
community. Esteemed judges and advisors. My fellow citizens. Last \
year, unprecedented challenges divided us. This year, we stand \
united, ready to move forward together"

# Chunks of 20 tokens; neighboring chunks share 5 tokens
text_splitter = TokenTextSplitter(chunk_size=20, chunk_overlap=5)
texts = text_splitter.split_text(text)
print(texts)

The output is the following:

['Ladies and Gentlemen, esteemed colleagues, and honored guests. Esteemed leaders and distinguished members', 'emed leaders and distinguished members of the community. Esteemed judges and advisors. My fellow citizens.', '. My fellow citizens. Last year, unprecedented challenges divided us. This year, we stand united,', ', we stand united, ready to move forward together']
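To make the overlap mechanics explicit, here is a minimal library-free sketch of fixed-size chunking. It counts words instead of tokens, so its chunk boundaries will differ from those of TokenTextSplitter. The function name `chunk_by_words` and its default values (200 words with a 20-word, or 10%, overlap) are assumptions chosen to mirror the guidance above.

```python
def chunk_by_words(text, chunk_size=200, overlap=20):
    """Split text into chunks of `chunk_size` words; each chunk repeats
    the last `overlap` words of the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(1, 11))  # "w1 w2 ... w10"
for chunk in chunk_by_words(sample, chunk_size=4, overlap=1):
    print(chunk)
# w1 w2 w3 w4
# w4 w5 w6 w7
# w7 w8 w9 w10
```

Each chunk starts with the last word of the previous one, which is the overlap that carries context across the boundary.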

- Variable-size chunking: Variable-size chunking refers to the dynamic segmentation of data or text into varying-sized components, as opposed to fixed-size divisions. This approach accommodates the diverse structures and characteristics present in different types of data.

- Sentence splitting: Sentence transformer models are neural architectures optimized for embedding at the sentence level. For example, BERT-based models tend to work best when text is chunked at the sentence level. Tools such as NLTK and spaCy provide functions to split a text into sentences.

- Specialized chunking: Some documents have an explicit structure. Research papers are organized into sections, and Markdown has its own syntax for headings and blocks. Such documents call for specialized chunking that splits along section or page boundaries, so that each chunk remains contextually relevant.

- Code chunking: This technique can be invaluable when embedding code into your vector DB. LangChain supports code chunking for numerous languages. Below is a code snippet that chunks Python code:

from langchain.text_splitter import (
    RecursiveCharacterTextSplitter,
    Language,
)

PYTHON_CODE = """
class SimpleCalculator:
    def add(self, a, b):
        return a + b

    def subtract(self, a, b):
        return a - b

# Using the SimpleCalculator
calculator = SimpleCalculator()
sum_result = calculator.add(5, 3)
diff_result = calculator.subtract(5, 3)
"""

# Split using separators suited to Python source code
python_splitter = RecursiveCharacterTextSplitter.from_language(
    language=Language.PYTHON, chunk_size=50, chunk_overlap=0
)
python_docs = python_splitter.create_documents([PYTHON_CODE])
python_docs  # displays the list in a notebook; use print(python_docs) in a script

The output is the following:

[Document(page_content='class SimpleCalculator:\n    def add(self, a, b):'),
 Document(page_content='return a + b'),
 Document(page_content='def subtract(self, a, b):'),
 Document(page_content='return a - b'),
 Document(page_content='# Using the SimpleCalculator'),
 Document(page_content='calculator = SimpleCalculator()'),
 Document(page_content='sum_result = calculator.add(5, 3)'),
 Document(page_content='diff_result = calculator.subtract(5, 3)')]
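The variable-size and sentence-splitting strategies listed above can be combined: split the text into sentences, then group whole sentences into chunks up to a word budget, so chunk sizes vary but no sentence is cut in half. The sketch below uses a deliberately naive regular expression instead of NLTK or spaCy, so it will mis-split abbreviations such as "Dr."; the function names and the `max_words` parameter are assumptions introduced for illustration.

```python
import re

def split_sentences(text):
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [sentence for sentence in sentences if sentence]

def chunk_by_sentences(text, max_words=20):
    """Group whole sentences into chunks of at most `max_words` words.
    A single sentence longer than `max_words` becomes its own chunk."""
    chunks, current, current_len = [], [], 0
    for sentence in split_sentences(text):
        n_words = len(sentence.split())
        if current and current_len + n_words > max_words:
            chunks.append(" ".join(current))  # close the chunk before it overflows
            current, current_len = [], 0
        current.append(sentence)
        current_len += n_words
    if current:
        chunks.append(" ".join(current))
    return chunks

speech = (
    "Ladies and Gentlemen, esteemed colleagues, and honored guests. "
    "Esteemed leaders and distinguished members of the community. "
    "Esteemed judges and advisors. My fellow citizens. "
    "Last year, unprecedented challenges divided us. "
    "This year, we stand united, ready to move forward together"
)

for chunk in chunk_by_sentences(speech, max_words=20):
    print(len(chunk.split()), "words:", chunk)
```

With a 20-word budget, the six sentences of the sample speech fall into two chunks, and each chunk ends on a sentence boundary, unlike the token-based output shown earlier.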

Chunking considerations

Chunking strategies vary with the data type, the format, and the chosen embedding model. For instance, code requires a different chunking approach than unstructured text. While models such as text-embedding-ada-002 tend to perform well with chunks of 256 or 512 tokens, our understanding of chunking is still evolving. Preprocessing also plays a crucial role before chunking: you can optimize your content by removing text that adds noise, such as stop words and special symbols. For the latest techniques, we suggest regularly checking the text splitters section of the LangChain documentation to make sure you employ the best strategy for your needs (Split by tokens from LangChain: https://python.langchain.com/docs/modules/data_connection/document_transformers/split_by_token).
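As a rough illustration of the preprocessing step mentioned above, the sketch below strips HTML-like tags and special symbols and can optionally drop stop words before the text is chunked. The stop-word list is a tiny hypothetical sample, and the function name and `remove_stop_words` flag are assumptions. Whether removing stop words helps depends on the embedding model, so it is left off by default.

```python
import re

# Tiny illustrative stop-word list; real lists (for example, NLTK's) are much longer.
STOP_WORDS = {"a", "an", "the", "of", "and", "is", "are", "to", "in"}

def preprocess(text, remove_stop_words=False):
    """Strip markup remnants and special symbols, then normalize whitespace."""
    text = re.sub(r"<[^>]+>", " ", text)          # drop HTML-like tags
    text = re.sub(r"[^\w\s.,;:!?'-]", " ", text)  # drop other special symbols
    if remove_stop_words:
        text = " ".join(word for word in text.split()
                        if word.lower() not in STOP_WORDS)
    return re.sub(r"\s+", " ", text).strip()

raw = "<p>Chunking   is ★ the first   step of the pipeline!</p>"
print(preprocess(raw))
# Chunking is the first step of the pipeline!
print(preprocess(raw, remove_stop_words=True))
# Chunking first step pipeline!
```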