A deep dive into vector DB essentials

To fully understand RAG, you need to understand vector DBs, because RAG relies heavily on their efficient data retrieval to resolve queries. A vector DB is a database designed to store and efficiently query high-dimensional vectors; it is often used for similarity search and machine learning tasks. The design and mechanics of a vector DB directly influence the effectiveness and accuracy of RAG answers.

In this section, we will cover the fundamental components of vector DBs (vectors and vector embeddings). In the next section, we will dive deeper into the important characteristics of vector DBs that enable a RAG-based generative AI solution, explain how they differ from regular databases, and then tie it all back to RAG.

Vectors and vector embeddings

A vector is a mathematical object that has both magnitude and direction and can be represented by an ordered list of numbers. In a more general sense, especially in computer science and machine learning, a vector can be thought of as an array or list of numbers that represents a point in a certain

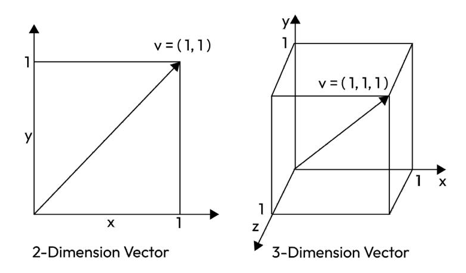

dimensional space. For instances depicted in the following image, in 2D space (on the left), a vector might be represented as [x, y], whereas in 3D space (on the right), it might be [x, y, z]:

Figure 4.2 – Representation of vectors in 2D and 3D space
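
To make the idea of magnitude and direction concrete, here is a minimal Python sketch that treats a vector as a plain list of numbers. The helper names `magnitude` and `direction` and the sample values are illustrative assumptions, not part of any particular library:

```python
import math

def magnitude(vector):
    """Return the Euclidean length of a vector given as a list of numbers."""
    return math.sqrt(sum(component ** 2 for component in vector))

def direction(vector):
    """Return the unit vector pointing the same way (assumes non-zero length)."""
    length = magnitude(vector)
    return [component / length for component in vector]

# Illustrative sample points; the values are made up for this sketch.
point_2d = [3.0, 4.0]        # [x, y]
point_3d = [1.0, 2.0, 2.0]   # [x, y, z]

print(magnitude(point_2d))   # 5.0
print(magnitude(point_3d))   # 3.0
print(direction(point_2d))   # [0.6, 0.8]
```

The same two functions work unchanged for lists of any length, which is why the list-of-numbers view extends naturally beyond 2D and 3D.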

Vector embedding refers to the representation of objects, such as words, sentences, or even entire documents, as vectors in a high-dimensional space. A high-dimensional space is a mathematical space with more than three dimensions, frequently used in data analysis and machine learning to represent intricate data structures. Think of it as a room where you can move in more than three directions, which makes it possible to describe and analyze complex data. The embedding process converts words, sentences, or documents into vector representations that capture the semantic relationships between them. Hence, words with similar meanings tend to be close to each other in the high-dimensional space.

Now, you must be wondering how this plays a role in designing generative AI solutions built on LLMs. Vector embeddings provide the foundational representation of data. They are a standardized numerical representation for diverse types of data, which LLMs use to process and generate information. This conversion of words and sentences into a numerical representation is performed by embedding models such as OpenAI’s text-embedding-ada-002. Let’s explain this with an example.
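
As a rough illustration of what "close to each other" can mean, the sketch below compares hand-written toy vectors using cosine similarity. Cosine similarity is one common choice of measure and is an assumption here, since the text does not name a specific metric. The four-dimensional vectors are invented for the example and were not produced by any embedding model; real models return vectors with far more dimensions. The names `cosine_similarity`, `most_similar`, and `toy_embeddings` are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up 4-dimensional "embeddings", for illustration only.
toy_embeddings = {
    "cat":    [0.90, 0.80, 0.10, 0.00],
    "kitten": [0.85, 0.90, 0.15, 0.05],
    "car":    [0.10, 0.00, 0.90, 0.80],
}

def most_similar(word, embeddings):
    """Return the other word whose vector is most similar to `word`."""
    query = embeddings[word]
    candidates = {w: v for w, v in embeddings.items() if w != word}
    return max(candidates, key=lambda w: cosine_similarity(query, candidates[w]))

print(most_similar("cat", toy_embeddings))  # kitten
```

With these toy values, "cat" scores much higher against "kitten" than against "car", mirroring the idea that semantically related items sit near each other in the embedding space.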
